I. Overview of streaming protocols

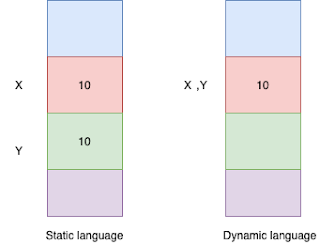

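We need a live video feature: video is pushed to a server and then pulled locally for playback. The server is the middle link in that chain, so we set it up first and deal with pushing and pulling afterwards. Most live streaming today uses the RTMP or HLS protocol. For the differences between the two, see:

Source: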

http://www.itread01.com/articles/1476627687.html

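This tutorial uses RTMP because of its lower latency.

II. Tutorial

1. First you need a server running Ubuntu 16.04 LTS, on which we will set up an nginx server.

2. Download and install nginx + nginx-rtmp-module

Install the required packages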

sudo apt-get install build-essential libpcre3 libpcre3-dev libssl-dev

Download nginx and nginx-rtmp-module

First, create a temp folder in your home directory

mkdir ~/temp

cd ~/temp

Then download nginx and nginx-rtmp-module separately

Install git (used to download the nginx-rtmp-module source)

sudo apt-get install git

Download nginx

wget http://nginx.org/download/nginx-1.12.0.tar.gz

Download nginx-rtmp-module (GitHub no longer serves the git:// protocol, so clone over https)

git clone https://github.com/arut/nginx-rtmp-module.git

Install nginx and nginx-rtmp-module

Extract the archive

tar xzf nginx-1.12.0.tar.gz

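Enter the directory

cd nginx-1.12.0

Finally, build nginx together with nginx-rtmp-module. Replace /path/to/nginx-rtmp-module with the directory you cloned the module into (~/temp/nginx-rtmp-module if you followed the steps above)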

./configure --add-module=/path/to/nginx-rtmp-module

make

sudo make install

Some versions of nginx (1.3.14 - 1.5.0) also need http_ssl_module added:

./configure --add-module=/path/to/nginx-rtmp-module --with-http_ssl_module

To build a debug version of nginx, add --with-debug

./configure --add-module=/path/to/nginx-rtmp-module --with-debug

At this point nginx and nginx-rtmp-module are installed.
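The download and build steps above can be collected into one script. The following is a minimal sketch, assuming the ~/temp layout and nginx 1.12.0 used in this tutorial; the names configure_flags, build_nginx_rtmp, WORKDIR, and NGINX_VERSION are made up for illustration and are not part of nginx.

```shell
#!/bin/sh
# Sketch: fetch and build nginx with nginx-rtmp-module.
# All function and variable names here are illustrative assumptions.

WORKDIR="${WORKDIR:-$HOME/temp}"
NGINX_VERSION="${NGINX_VERSION:-1.12.0}"

# Print the ./configure flags for a given module directory.
# Pass "debug" as the second argument to append --with-debug.
configure_flags() {
    if [ "${2:-}" = "debug" ]; then
        printf '%s\n' "--add-module=$1 --with-debug"
    else
        printf '%s\n' "--add-module=$1"
    fi
}

# Download, unpack, configure, build, and install.
# Note: this changes the caller's current directory.
build_nginx_rtmp() {
    mkdir -p "$WORKDIR" && cd "$WORKDIR" || return 1
    wget "http://nginx.org/download/nginx-${NGINX_VERSION}.tar.gz" || return 1
    if [ ! -d nginx-rtmp-module ]; then
        git clone https://github.com/arut/nginx-rtmp-module.git || return 1
    fi
    tar xzf "nginx-${NGINX_VERSION}.tar.gz" || return 1
    cd "nginx-${NGINX_VERSION}" || return 1
    # Unquoted on purpose so the flags split into separate arguments
    # (this assumes WORKDIR contains no spaces).
    ./configure $(configure_flags "$WORKDIR/nginx-rtmp-module" "${1:-}") || return 1
    make || return 1
    sudo make install
}
```

Calling build_nginx_rtmp runs a normal build, and build_nginx_rtmp debug runs a debug build.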

Commands for starting, stopping, and reloading nginx:

sudo /usr/local/nginx/sbin/nginx # start

sudo /usr/local/nginx/sbin/nginx -s stop # stop

sudo /usr/local/nginx/sbin/nginx -s reopen # reopen the log files

sudo /usr/local/nginx/sbin/nginx -s reload # reload the configuration file

Run /usr/local/nginx/sbin/nginx -h to show the help page
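Since every command above repeats the full binary path, a small wrapper can save typing. This is only a sketch: ngx and NGINX_BIN are hypothetical names, and the extra test action relies on nginx's -t flag, which checks the configuration without starting the server.

```shell
#!/bin/sh
# Sketch: one helper for the nginx control commands listed above.
# ngx and NGINX_BIN are illustrative names, not part of nginx.
NGINX_BIN="${NGINX_BIN:-/usr/local/nginx/sbin/nginx}"

ngx() {
    case "$1" in
        start)  sudo "$NGINX_BIN" ;;
        stop)   sudo "$NGINX_BIN" -s stop ;;
        reopen) sudo "$NGINX_BIN" -s reopen ;;  # reopen the log files
        reload) sudo "$NGINX_BIN" -s reload ;;  # re-read nginx.conf
        test)   sudo "$NGINX_BIN" -t ;;         # check config syntax only
        *)
            echo "usage: ngx {start|stop|reopen|reload|test}" >&2
            return 2
            ;;
    esac
}
```

For example, ngx test followed by ngx reload applies a configuration change only after it has passed the syntax check.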

Start it to check that it works:

sudo /usr/local/nginx/sbin/nginx

Then open http://localhost:80 in a browser
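On a server without a browser, the same check can be done from the command line. A minimal sketch, assuming curl is installed (it is not in the package list above); check_http is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: succeed only if the URL answers with HTTP status 200.
# check_http is an illustrative name; curl is assumed to be installed.
check_http() {
    # -s: silent, -o /dev/null: discard the body,
    # -w '%{http_code}': print only the status code
    code=$(curl -s -o /dev/null -w '%{http_code}' "$1")
    [ "$code" = "200" ]
}
```

Usage: check_http http://localhost:80 && echo "nginx is up"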

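Once that works, modify the nginx configuration

The configuration file is located at /usr/local/nginx/conf/nginx.conf

Back it up first, just in case

sudo mv /usr/local/nginx/conf/nginx.conf /usr/local/nginx/conf/nginx.conf.bk

Then edit nginx.conf

sudo vim /usr/local/nginx/conf/nginx.conf

The file is empty when opened, because the original was moved away. Write the following into it: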

# nginx refuses to start without an events section

events {

worker_connections 1024;

}

rtmp {

server {

listen 1935;

chunk_size 4000;

# TV mode: one publisher, many subscribers

application mytv {

# enable live streaming

live on;

# record first 1K of stream

record all;

record_path /tmp/av;

record_max_size 1K;

# append current timestamp to each flv

record_unique on;

# publish only from localhost

allow publish 127.0.0.1;

deny publish all;

#allow play all;

}

# Transcoding (ffmpeg needed)

application big {

live on;

# On every published stream run this command (ffmpeg)

# with substitutions: $app/${app}, $name/${name} for application & stream name.

#

# This ffmpeg call receives stream from this application &

# reduces the resolution down to 32x32. The stream is then published to

# 'small' application (see below) under the same name.

#

# ffmpeg can do anything with the stream like video/audio

# transcoding, resizing, altering container/codec params etc

#

# Multiple exec lines can be specified.

exec ffmpeg -re -i rtmp://localhost:1935/$app/$name -vcodec flv -acodec copy -s 32x32

-f flv rtmp://localhost:1935/small/${name};

}

application small {

live on;

# Video with reduced resolution comes here from ffmpeg

}

application webcam {

live on;

# Stream from local webcam

exec_static ffmpeg -f video4linux2 -i /dev/video0 -c:v libx264 -an

-f flv rtmp://localhost:1935/webcam/mystream;

}

application mypush {

live on;

# Every stream published here

# is automatically pushed to

# these two machines

push rtmp1.example.com;

push rtmp2.example.com:1934;

}

application mypull {

live on;

# Pull all streams from remote machine

# and play locally

pull rtmp://rtmp3.example.com pageUrl=www.example.com/index.html;

}

application mystaticpull {

live on;

# Static pull is started at nginx start

pull rtmp://rtmp4.example.com pageUrl=www.example.com/index.html name=mystream static;

}

# video on demand

application vod {

play /var/flvs;

}

application vod2 {

play /var/mp4s;

}

# Many publishers, many subscribers

# no checks, no recording

application videochat {

live on;

# The following notifications receive all

# the session variables as well as

# particular call arguments in HTTP POST

# request

# Make HTTP request & use HTTP retcode

# to decide whether to allow publishing

# from this connection or not

on_publish http://localhost:8080/publish;

# Same with playing

on_play http://localhost:8080/play;

# Publish/play end (repeats on disconnect)

on_done http://localhost:8080/done;

# All above mentioned notifications receive

# standard connect() arguments as well as

# play/publish ones. If any arguments are sent

# with GET-style syntax to play & publish

# these are also included.

# Example URL:

# rtmp://localhost/myapp/mystream?a=b&c=d

# record 10 video keyframes (no audio) every 2 minutes

record keyframes;

record_path /tmp/vc;

record_max_frames 10;

record_interval 2m;

# Async notify about an flv recorded

on_record_done http://localhost:8080/record_done;

}

# HLS

# For HLS to work please create a directory in tmpfs (/tmp/hls here)

# for the fragments. The directory contents are served via HTTP (see

# http{} section in config)

#

# Incoming stream must be in H264/AAC. For iPhones use baseline H264

# profile (see ffmpeg example).

# This example creates RTMP stream from movie ready for HLS:

#

# ffmpeg -loglevel verbose -re -i movie.avi -vcodec libx264

# -vprofile baseline -acodec libmp3lame -ar 44100 -ac 1

# -f flv rtmp://localhost:1935/hls/movie

#

# If you need to transcode live stream use 'exec' feature.

#

application hls {

live on;

hls on;

hls_path /tmp/hls;

}

# MPEG-DASH is similar to HLS

application dash {

live on;

dash on;

dash_path /tmp/dash;

}

}

}

# HTTP can be used for accessing RTMP stats

http {

server {

listen 8080;

# This URL provides RTMP statistics in XML

location /stat {

rtmp_stat all;

# Use this stylesheet to view XML as web page

# in browser

rtmp_stat_stylesheet stat.xsl;

}

location /stat.xsl {

# XML stylesheet to view RTMP stats.

# Copy stat.xsl wherever you want

# and put the full directory path here

root /path/to/stat.xsl/;

}

location /hls {

# Serve HLS fragments

types {

application/vnd.apple.mpegurl m3u8;

video/mp2t ts;

}

root /tmp;

add_header Cache-Control no-cache;

}

location /dash {

# Serve DASH fragments

root /tmp;

add_header Cache-Control no-cache;

}

}

}
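The full example above enables many applications at once. For the push and pull test later in this tutorial only the hls application and the /hls location are used, so a reduced configuration may be easier to debug. The sketch below writes such a file using only directives taken from the example above; write_minimal_conf is a hypothetical helper name and the output path is whatever you pass in.

```shell
#!/bin/sh
# Sketch: write a reduced nginx.conf with only the pieces the
# RTMP/HLS test needs. write_minimal_conf is an illustrative name.
write_minimal_conf() {
    cat > "$1" <<'EOF'
events {
    worker_connections 1024;
}

rtmp {
    server {
        listen 1935;
        application hls {
            live on;
            hls on;
            hls_path /tmp/hls;
        }
    }
}

http {
    server {
        listen 8080;
        location /hls {
            types {
                application/vnd.apple.mpegurl m3u8;
                video/mp2t ts;
            }
            root /tmp;
            add_header Cache-Control no-cache;
        }
    }
}
EOF
}
```

For example, write_minimal_conf /tmp/rtmp-min.conf followed by sudo /usr/local/nginx/sbin/nginx -t -c /tmp/rtmp-min.conf checks the reduced file without touching the installed configuration.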

Restart nginx

Stop nginx with sudo /usr/local/nginx/sbin/nginx -s stop, then start it again with sudo /usr/local/nginx/sbin/nginx
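A broken nginx.conf would leave the server stopped after this restart, so it can help to check the file first. The following is a sketch under that assumption; restart_nginx and NGINX_BIN are hypothetical names, and the one-second pause is an arbitrary choice to let the old process exit.

```shell
#!/bin/sh
# Sketch: restart nginx only if the configuration passes a syntax check.
# restart_nginx and NGINX_BIN are illustrative names.
NGINX_BIN="${NGINX_BIN:-/usr/local/nginx/sbin/nginx}"

restart_nginx() {
    # -t tests the configuration and exits; abort if it is invalid
    sudo "$NGINX_BIN" -t || return 1
    sudo "$NGINX_BIN" -s stop
    sleep 1   # arbitrary pause so the old process can exit
    sudo "$NGINX_BIN"
}
```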

At this point the live streaming server is set up.

III. How to test

1. A stream URL has the form:

rtmp://rtmp.example.com/app[/name]
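To make the URL pattern concrete, the sketch below assembles a stream URL from its parts. rtmp_url is a hypothetical helper; the default port 1935 matches the listen directive in the configuration above.

```shell
#!/bin/sh
# Sketch: build rtmp://HOST:PORT/APP[/NAME].
# rtmp_url is an illustrative name. Usage: rtmp_url HOST APP [NAME] [PORT]
rtmp_url() {
    url="rtmp://$1:${4:-1935}/$2"
    # The stream name is optional, as in the pattern above
    if [ -n "${3:-}" ]; then
        url="$url/$3"
    fi
    printf '%s\n' "$url"
}
```

For example, rtmp_url localhost hls stream prints rtmp://localhost:1935/hls/stream, which addresses a stream named stream in the hls application.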

2. There are two ways to test. One is to use an Android app for pushing and pulling streams, which you can find on GitHub

Links:

https://github.com/begeekmyfriend/yasea

https://github.com/ant-media/LiveVideoBroadcaster

The other is to watch the stream directly with VLC

https://www.youtube.com/watch?v=VsahDWNByVQ

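3. Push an mp4 file to test pulling the stream

Install ffmpeg

Here ffmpeg pushes a local video file to the streaming server, which simulates the push side of a live broadcast. It is mainly useful for testing during development

Installation is simple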

sudo apt-get update

sudo apt-get install ffmpeg

Push and pull test

Here we use ffmpeg and a video player to check that the streaming server was installed correctly.

Push command

ffmpeg -re -i /path/to/video.mp4 -vcodec libx264 -vprofile baseline -acodec aac -ar 44100 -strict -2 -ac 1 -f flv -s 1280x720 -q 10 rtmp://localhost:1935/hls/stream
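When pushing several test files, the command above can be wrapped so that only the file and the stream name change. A minimal sketch using the same ffmpeg flags as the push command; push_file is a hypothetical name and the default stream name stream is an assumption chosen to match the playback URLs in this tutorial.

```shell
#!/bin/sh
# Sketch: push a local file to the hls application on localhost.
# push_file is an illustrative name. Usage: push_file FILE [STREAM_NAME]
push_file() {
    src="$1"
    name="${2:-stream}"
    if [ ! -f "$src" ]; then
        echo "push_file: no such file: $src" >&2
        return 1
    fi
    # -re reads the input at its native frame rate, which imitates a live source
    ffmpeg -re -i "$src" \
        -vcodec libx264 -vprofile baseline \
        -acodec aac -ar 44100 -strict -2 -ac 1 \
        -f flv -s 1280x720 -q 10 \
        "rtmp://localhost:1935/hls/${name}"
}
```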

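Pull

Test with a video player here. In its Open URL dialog you can enter a link in either of two protocols, rtmp or http:

RTMP stream: rtmp://localhost:1935/hls/stream

HLS stream: http://localhost:8080/hls/stream.m3u8

Note that hls and stream in these URLs must match the application and stream name used in the push command.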
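If the HLS link does not play, one thing to check is whether the playlist file has been written yet. With hls_path set to /tmp/hls as in the configuration above, nginx-rtmp-module writes a playlist named after the stream into that directory. The sketch below tests for it; hls_ready is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: succeed if a non-empty playlist exists for the stream.
# hls_ready is an illustrative name. Usage: hls_ready STREAM_NAME [HLS_DIR]
hls_ready() {
    # /tmp/hls is the hls_path from the configuration above
    [ -s "${2:-/tmp/hls}/$1.m3u8" ]
}
```

Usage: hls_ready stream && echo "playlist is available". The playlist usually appears a few seconds after the push starts, once the first fragment is complete.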

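The examples below come from the nginx-rtmp-module wiki and publish to an application named myapp. The configuration above does not define myapp, so replace it with an application that exists there (for example hls).

Streaming without transcoding (the codecs in `test.mp4` are already RTMP-compatible)

ffmpeg -re -i /var/Videos/test.mp4 -c copy -f flv rtmp://localhost/myapp/mystream

Streaming with audio (AAC) and video (H264) encoding; requires `libx264` and `libfaac` (newer ffmpeg builds no longer include libfaac; the built-in aac encoder can be used instead)

ffmpeg -re -i /var/Videos/test.mp4 -c:v libx264 -c:a libfaac -ar 44100 -ac 1 -f flv rtmp://localhost/myapp/mystream

Streaming with audio (MP3) and video (H264) encoding; requires `libx264` and `libmp3lame`

ffmpeg -re -i /var/Videos/test.mp4 -c:v libx264 -c:a libmp3lame -ar 44100 -ac 1 -f flv rtmp://localhost/myapp/mystream

Streaming with audio (Nellymoser) and video (Sorenson H263) encoding

ffmpeg -re -i /var/Videos/test.mp4 -c:v flv -c:a nellymoser -ar 44100 -ac 1 -f flv rtmp://localhost/myapp/mystream

Publishing video from a webcam (Linux)

ffmpeg -f video4linux2 -i /dev/video0 -c:v libx264 -an -f flv rtmp://localhost/myapp/mystream

Publishing video from a webcam (MacOS)

ffmpeg -f avfoundation -framerate 30 -i "0" -c:v libx264 -an -f flv rtmp://localhost/myapp/mystream

Playing with ffplay

ffplay rtmp://localhost/myapp/mystream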

Sources:

http://www.itread01.com/articles/1476627687.html

https://github.com/arut/nginx-rtmp-module/wiki/Getting-started-with-nginx-rtmp

http://mythsand.me/2017/10/22/%E7%9B%B4%E6%92%AD%E6%9C%8D%E5%8A%A1%E5%99%A8%E6%90%AD%E5%BB%BA%EF%BC%9Aubuntu-16-04-LTS-nginx-nginx-rtmp-module/

https://github.com/begeekmyfriend/yasea

https://github.com/ant-media/LiveVideoBroadcaster

https://www.youtube.com/watch?v=VsahDWNByVQ
